Task-Oriented Evaluation of Perception Performance for Automated Driving

Bosch Research Blog | Posted by Maria Lyssenko, 2023-03-23

Our perception algorithms need to be best where it really matters. This is why the evaluation of perception performance should consider the importance of to-be-detected objects for the automated vehicle’s current task.

Automated vehicles (AVs) operating on public roads need to be safe: they must not endanger other traffic participants. This means the vehicle needs to interact safely with all traffic participants in its environment. When looking at real driving data, it becomes apparent that some traffic participants are more safety relevant than others. For example, look at the image below. Here, the pedestrian close to the AV’s driving corridor, marked by the red box, is highly safety relevant, whereas the pedestrian far away on the other side of the road, marked by the green box, is currently not important. For our analyses, we use Argoverse 1.1.

In the scientific literature, much work has been done on classifying the importance of traffic participants in a given traffic situation. Most of this work expresses importance in the form of metrics, most of which originate from the motion planning domain and focus on the dynamic interaction of traffic participants with an AV. For a more detailed description of such metrics and their use in the motion planning domain, we refer to Patrick’s blog post.

The basis for safe motion planning is that the system perceives its environment accurately, especially when it concerns safety. As such, the importance determined by these metrics also applies to perception functions. In this blog post, we describe our work on integrating such metrics into the evaluation of perception functions.

Let us consider pedestrian detection in urban environments as an example. Here, the interaction with vulnerable road users is especially safety critical. Usually, the performance of such a detection function is evaluated based on mean average performance over a test dataset that contains a number of test images with pedestrians. While such a mean average performance is useful for a general evaluation, we know from above that detection performance matters most in safety-critical interactions. As a consequence, we additionally need an evaluation that explicitly considers the safety relevance of pedestrians for the current driving task and the resulting interactions.

Therefore, our work incorporates metrics describing the safety relevance of other traffic participants into the evaluation of our detector. In an initial analysis, we used the distance of pedestrians to the AV as a simple proxy for their relevance.
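
As a rough illustration of such a distance-based analysis, the following Python sketch groups ground-truth pedestrians into distance bins and reports the detection recall per bin. The function name, the tuple layout (position in the ego frame plus a detection flag), and the bin edges are assumptions made for this example; it is not the evaluation code used in the work described here.

```python
import math
from collections import defaultdict


def recall_by_distance(pedestrians, bin_edges):
    """Return the detection recall per distance bin.

    pedestrians: iterable of (x, y, detected), with the AV at the origin
                 and coordinates in meters (hypothetical layout).
    bin_edges:   ascending distances in meters, e.g. [0, 10, 20, 60].
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for x, y, detected in pedestrians:
        dist = math.hypot(x, y)
        # Assign the pedestrian to the first half-open bin [lo, hi) it falls in.
        for lo, hi in zip(bin_edges[:-1], bin_edges[1:]):
            if lo <= dist < hi:
                totals[(lo, hi)] += 1
                hits[(lo, hi)] += int(detected)
                break
    return {b: hits[b] / totals[b] for b in totals}


example = [(3, 4, True), (6, 8, False), (0, 5, True), (30, 40, True)]
per_bin = recall_by_distance(example, [0, 10, 20, 60])
```

A detector with a good overall score can still show a weak bin close to the vehicle, which is exactly what a single averaged number hides.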

While this already provides valuable insight, we wanted to additionally consider human motion and the resulting interaction, visualized in the animation. The key advantage of using metrics to characterize the interactions as described above is that we can leverage information from the motion domain while being able to decouple the perception evaluation from a concrete motion planning system.

Key components of these metrics are models that characterize the possible future behavior of pedestrians in relation to the AV’s current driving task. As the exact future behavior of pedestrians cannot be known, we leverage reachability analyses based on so-called reachable sets, which can efficiently capture the uncertainty about physically plausible future behavior.
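
To make the idea of a reachable set more concrete, here is a deliberately simplified Python sketch. It assumes a pedestrian who can walk in any direction at up to `v_max`, so the reachable set after time t is a disc of radius `v_max * t`, and it models the driving corridor as a fixed rectangle in the AV's frame. Both modeling choices, and all function and parameter names, are assumptions for illustration only; the reachability analyses referred to above are more elaborate.

```python
import math


def distance_to_corridor(px, py, length, half_width):
    """Euclidean distance from a point to the corridor rectangle.

    The corridor spans 0 <= x <= length and |y| <= half_width in the
    AV's frame (x forward, y to the left), in meters.
    """
    dx = max(-px, 0.0, px - length)
    dy = max(abs(py) - half_width, 0.0)
    return math.hypot(dx, dy)


def earliest_entry_time(px, py, v_max, length, half_width):
    """Earliest time at which the pedestrian's reachable disc touches the corridor.

    With a speed bound v_max (m/s), the disc of radius v_max * t first
    intersects the corridor when the radius equals the distance to it.
    """
    if v_max <= 0:
        raise ValueError("v_max must be positive")
    return distance_to_corridor(px, py, length, half_width) / v_max


# Pedestrian 3 m to the side of a 3 m wide, 50 m long corridor, walking at up to 2 m/s.
t_entry = earliest_entry_time(10.0, 4.5, v_max=2.0, length=50.0, half_width=1.5)
```

Because the disc over-approximates every physically plausible walk, the resulting time is a conservative lower bound: no pedestrian obeying the speed limit can enter the corridor sooner.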

UnCoVerCPS

We have been investigating reachability for applications in robotics and autonomous driving at Bosch Research for several years. Within the Horizon 2020 project UnCoVerCPS, we leveraged (online) reachability for safe path planning. We showed that these approaches are interesting in various robotics applications, e.g., for mobile service robots, as described in “Provably safe motion of mobile robots in human environments”.

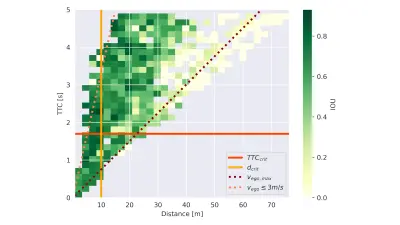

Our approach can be applied to all pedestrian datasets that also include information from the motion domain, such as the position, orientation, and velocity of pedestrians. Ideally, the dataset uses sequences of consecutive images so that interactions can be analyzed as they unfold over time. For each image in the dataset, we calculate the metrics described above based on the information from the motion domain. Then, we annotate the pedestrians in all images with the calculated metric values. In this extended dataset, we can then identify and focus on all safety-relevant pedestrians, as shown in the figure below. In this example, we select all pedestrians that could enter the AV’s driving corridor in front of the AV within the next 5 seconds, as measured by the time-to-collision (TTC). We additionally relate the TTC to the distance of the pedestrians.

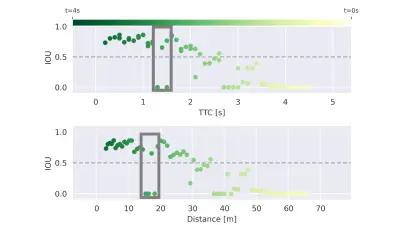
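
The selection step described above can be sketched in a few lines of Python. The `PedestrianLabel` record and its field names are hypothetical stand-ins for an annotation that has already been extended with a TTC value; only the 5-second horizon is taken from the example in the text.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PedestrianLabel:
    """One ground-truth pedestrian in one image (hypothetical schema)."""
    frame: int
    track_id: str
    distance_m: float
    ttc_s: Optional[float]  # None if the pedestrian cannot reach the corridor
    detected: bool


def select_safety_relevant(labels: List[PedestrianLabel],
                           ttc_horizon_s: float = 5.0) -> List[PedestrianLabel]:
    """Keep pedestrians that could enter the corridor within the horizon."""
    return [l for l in labels if l.ttc_s is not None and l.ttc_s <= ttc_horizon_s]


def recall(labels: List[PedestrianLabel]) -> Optional[float]:
    """Fraction of the given pedestrians that were detected, or None if empty."""
    if not labels:
        return None
    return sum(l.detected for l in labels) / len(labels)


labels = [
    PedestrianLabel(0, "a", 8.0, 1.8, False),
    PedestrianLabel(0, "b", 35.0, None, True),
    PedestrianLabel(1, "a", 7.5, 1.6, True),
    PedestrianLabel(1, "c", 20.0, 7.0, True),
]
relevant = select_safety_relevant(labels)
overall_recall = recall(labels)
relevant_recall = recall(relevant)
```

In this toy data, recall over all pedestrians is higher than recall over the safety-relevant subset, which is the kind of gap the extended dataset is meant to expose.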

Based on these results, we can identify key interactions between the AV and a pedestrian where the current detection performance is insufficient. For example, we may select interactions where we encounter consecutive misdetections in a safety-critical region, as shown in the figure below. Here, we encounter three misdetections for a pedestrian that could enter the driving corridor within the next 1.5 s to 2 s, making this a highly safety-relevant interaction.
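
One possible way to search for such interactions is sketched below in Python. It scans the frames of a single pedestrian track and reports runs of consecutive misdetections that occur while the TTC is below a threshold. The tuple layout, the function name, and the default thresholds (2 s and three frames, loosely following the example above) are assumptions for illustration, and the track is assumed to be sorted by frame without gaps.

```python
from itertools import groupby


def critical_miss_runs(track, ttc_max_s=2.0, min_run=3):
    """Find runs of consecutive safety-critical misdetections in one track.

    track: list of (frame, ttc_s, detected) for one pedestrian, sorted by
           frame; ttc_s may be None if the corridor is not reachable.
    Returns a list of (first_frame, last_frame) for each run of at least
    min_run frames that were missed while ttc_s <= ttc_max_s.
    """
    flagged = [
        (frame, ttc is not None and ttc <= ttc_max_s and not detected)
        for frame, ttc, detected in track
    ]
    runs = []
    # groupby collapses neighboring frames that share the same flag value.
    for is_critical_miss, group in groupby(flagged, key=lambda item: item[1]):
        frames = [frame for frame, _ in group]
        if is_critical_miss and len(frames) >= min_run:
            runs.append((frames[0], frames[-1]))
    return runs


track = [
    (0, 3.0, True),
    (1, 2.0, False),
    (2, 1.8, False),
    (3, 1.6, False),
    (4, 1.5, True),
    (5, 1.4, False),
]
runs = critical_miss_runs(track)
```

The isolated miss in frame 5 is not reported because it does not reach the minimum run length; lowering `min_run` would make the search more sensitive.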

Our approach allows developers of perception functions to identify key interactions where performance is crucial for safety, as a basis for further improving the detection function. While we discuss our approach in the context of verification and test datasets, similar considerations can be applied to the training of deep-learning-based detectors.

You can learn more about general testing of visual perception functions for automated driving in a recent journal article.

Verification and validation (V&V) approaches, such as explicitly focusing on safety relevance when evaluating the perception function, are fundamental to AI safety.

What are your thoughts on this topic?

Please feel free to share them or to contact me directly.

Author: Maria Lyssenko

Maria Lyssenko studied Mechatronics at the University of Stuttgart, where she specialized in computer vision. After her Bachelor’s degree, Maria did a research internship at Bosch in Plymouth, Michigan (U.S.), where she worked on traffic light detection and classification using classical computer vision techniques. Towards the end of her Master’s degree, Maria did an internship at Bosch Corporate Research in Renningen, Germany, where she conducted research on image quality assessment for pedestrian detection in automated driving. Following her Master’s thesis, Maria deepened her knowledge of deep-learning-based perception by using a neural network to learn a new image quality assessment metric for data quality quantification (“Quantitative Investigation of Data Quality Aspects on CNN-based Visual Perception in Automated Driving”). In December 2020, Maria started her doctorate at the Technical University of Munich on the topic “Leveraging Domain Knowledge for Deep Neural Network Safety”. The main scope of this work is the implementation of safety-aware metrics for criticality-driven pedestrian detection evaluation in autonomous driving.
