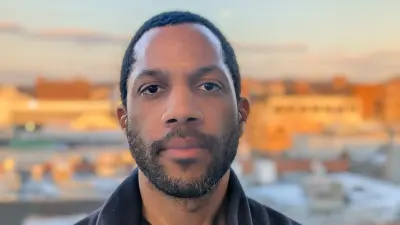

Jonathan Francis, Ph.D.

Senior Research Scientist, Robot Learning and Multimodal Machine Learning

I am a Senior Research Scientist at Bosch Research Pittsburgh, a Sponsored Scientist in the Robotics Institute at Carnegie Mellon University (CMU), and Adjunct Staff / Area Expert of Robotics in the Carnegie Bosch Institute at CMU. My primary research interests combine Multimodal Machine Learning and Robot Learning. I develop autonomous systems that learn safe, robust, multimodal/multisensory, and transferable representations of the world, and that are therefore capable of acquiring, adapting, and improving their own skills and behavior when deployed to diverse scenarios.

This work addresses key gaps that have previously limited the deployment of advanced autonomous technologies to Bosch production systems. Through numerous publications, along with the workshops and challenges I organize each year, I remain active in the robotics and AI/ML scientific communities. I frequently serve on the Technical Program Committee (TPC) or as an Area Chair, Editor, Associate Editor, or Scientific Advisory Board member for various conference and journal venues. I also manage multiple cross-institutional collaborations and publicly funded projects, and I advise PhD students and junior researchers.

Please tell us what fascinates you most about research.

Research allows us to pioneer new possibilities, continually expand humanity’s knowledge, and solve problems that were previously thought impossible. For an industrial research scientist, the work is about balancing a career dedicated to scientific contribution with the opportunity (and responsibility) to leverage cutting-edge technologies to enable new efficiencies and capabilities in company products, services, and manufacturing processes. Indeed, I think what I value most is the chance to blend theoretical breakthroughs in ML, AI, and Robotics with practical, real-world applications. In the era of versatile, large-capacity world and action models, it has become increasingly possible to develop autonomous systems that can reason about their surroundings, learn from demonstrations, monitor their own progress toward goals, and take corrective actions to remediate their own suboptimal behavior. While the research journey is certainly not for the faint of heart, the prospect of contributing to both the development and the application of these technologies is what drives me forward.

What makes research done at Bosch so special?

Bosch Research stands out because it is rooted in the practical needs of industry while still encouraging top-tier scientific exploration. Bosch has an incredibly diverse portfolio of research topics and a large, multicultural, and multinational research presence. The collaboration between Bosch Research Pittsburgh and esteemed academic institutions such as Carnegie Mellon University fosters a dynamic exchange of ideas, enabling complex research to be translated into deployable solutions. Additionally, Bosch's focus on sustainability, efficiency, and reliability ensures that our research not only advances technology but also aligns with broader societal goals.

What research topics are you currently working on at Bosch?

My work pursues improvements in the intelligence and adaptability of autonomous systems that are deployed to diverse environments. My current research focuses on advanced robotics, empowered with multimodal/multisensory Gen AI models and applied to production systems and factory intralogistics processes. On the modelling side, I am considering the following learning paradigms: (i) robot trajectory retrieval for augmenting test-time policy learning (similar in spirit to retrieval-augmented generation, or RAG, but with robot trajectories), to improve data (re-)use; (ii) generalizing and adapting robot policies online, via guidance from agentic frameworks (e.g., chain-of-thought reasoning, in-context learning, and code generation); (iii) multimodal/multisensory representation learning with, e.g., visual/vibro-tactile signals, for improved understanding of object contact physics, object affordances, and object surface properties in tabletop and mobile manipulation settings; and (iv) cross-embodiment transfer learning to scale methods across different robot platforms. On the application side, we consider factory automation, such as dexterous tabletop manipulation for assembly, as well as mobile manipulation tasks such as fetching and retrieving assembly components, transporting tools, and tending machines. We propose to combine all of the aforementioned capabilities into AI/Robotics dexterity “toolkits” that enable line managers to start automating complex tasks out of the box. Finally, we assess and facilitate the deployment of humanoid robots for factory automation tasks.

What are the biggest scientific challenges in your field of research?

Despite all the remarkable progress, there are still several hurdles to overcome in Robot Learning and Multimodal Machine Learning:

1. Data Efficiency: Reducing the dependency on large amounts of labeled data for training robust models remains a critical challenge, necessitating the development of more efficient learning techniques. Enabling systems to make use of the wealth of existing human demonstrations (e.g., videos on YouTube) poses challenges in learning effective common representations. See Memmel et al., 2025; Yoo et al., 2025; Niu et al., 2025; etc.

2. Multimodal Fusion: Unifying concepts from diverse sensor modalities remains an open topic in representation learning, even with Gen AI. Each modality has its own noise characteristics and data formats, and it is difficult to combine them into efficient token or concept representations in ways that generalize across domains. See Tatiya et al., 2022, 2024.

3. Cross-domain Generalization: Ensuring that advanced learning techniques operate reliably at scale requires experience with different robot hardware platforms, in different environments, across different tasks, and with different objects and workspace configurations. While it may be tempting to train policies in simulation before deploying them to real-world settings, models must still grapple with the distribution shift between synthetic data and real-world observations (the sim-to-real gap), which often stands in the way of reliable deployment. See Huang et al., 2023; Tatiya et al., 2022, 2024.

4. Safety, Robustness, and Trustworthiness: From algorithmic transparency to reliable fail-safes, establishing trust in autonomous systems in high-stakes industrial settings is pivotal. See Qadri et al., 2025; Bucker et al., 2024; etc.

How do the results of your research become part of solutions “Invented for life”?

Learning via embodied interactions and having rich multisensory representations of the world are essential for autonomous systems to make informed decisions, perform safe actions, adapt to changing environmental conditions, and interact efficiently with humans and other agents.

Curriculum vitae

Since 2024

Adjunct Robotics Research Staff, Carnegie Bosch Institute, Carnegie Mellon University

Since 2022

Sponsored Scientist, Robotics Institute, Carnegie Mellon University

Senior Research Scientist, Bosch Research Pittsburgh

2017 to 2022

Ph.D. Student/Candidate, Robotics + Language Technologies, School of Computer Science, Carnegie Mellon University

2014 to 2022

Research Scientist I/II, Bosch Research Pittsburgh

Selected publications

Memmel et al., 2025

- Marius Memmel, Jacob Berg, Bingqing Chen, Abhishek Gupta, Jonathan Francis

- The Thirteenth International Conference on Learning Representations (ICLR 2025)

Yoo et al., 2025

- Uksang Yoo, Jonathan Francis, Jean Oh, Jeffrey Ichnowski

- arXiv

Niu et al., 2025

- Yaru Niu, Yunzhe Zhang, Mingyang Yu, Changyi Lin, Chenhao Li, Yikai Wang, Yuxiang Yang, Wenhao Yu, Tingnan Zhang, Zhenzhen Li, Jonathan Francis, Bingqing Chen, Jie Tan, Ding Zhao

- arXiv

Bucker et al., 2024

- Arthur Bucker, Pablo Ortega-Kral, Jonathan Francis, Jean Oh

- arXiv

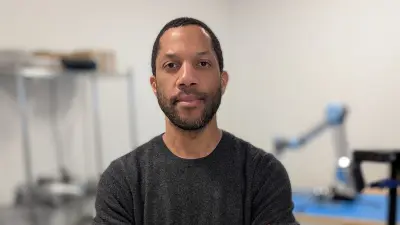

Get in touch with me

Jonathan Francis, Ph.D.

Senior Research Scientist, Robot Learning and Multimodal Machine Learning