Assembly assistance for the perfect assembly guide

Bosch Research engineers are developing DeepInspect, a highly flexible, easy-to-train, camera-based and AI-supported assistance system for manual assembly stations.

A youngster who enjoys scale model-building has three bowls with pre-sorted clip-together pieces in front of him in his bedroom and, next to them, a booklet with detailed instructions for building a scale model of a cool racing car. He reaches for the necessary parts one by one and carefully puts them together. Until he picks up the wrong piece and puts it in the wrong place, that is. The boy becomes frustrated and it takes him a while to figure out where he went wrong so that he can get back on track.

There are around 1,000 manual assembly stations at Bosch’s plants. If errors occur at one of them or at one of our customers’ plants, this not only causes frustration but also leads to increased production costs. And the “models” are usually more complex than the sets which children build. An assembly guide which tells plant employees straight away if they have put the right part in the right position during a particular step would be extremely helpful.

Deep learning allows robust object recognition

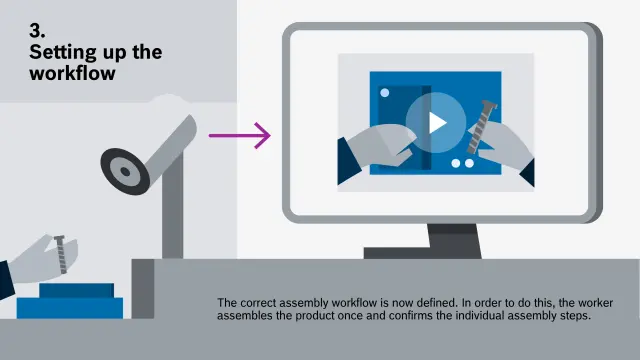

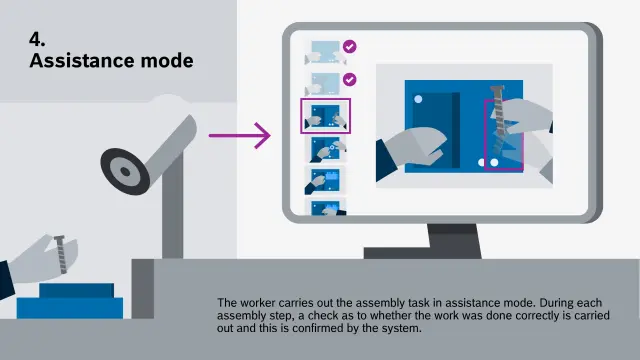

Bosch Research engineers are currently working on such a solution. DeepInspect is a high-performance, intelligent and easy-to-train assembly assistance system designed to provide help at manual assembly stations.

In spite of automation, important manual work still has to be carried out at the plants. This includes production tasks which must be performed by hand because they require a high degree of dexterity, procedures involving items that come in small batch sizes where building a fully automated assembly line would not make economic sense, and processes requiring human flexibility which a machine cannot provide. However, this does not mean that machines and software cannot be used to help people during their work.

This is where DeepInspect comes in. “Deep” refers to the concept of deep learning, i.e. machine learning on the basis of deep neural networks, for example for image analysis. “Inspect” stands for the insight and checking that the technology provides. “Using an intelligent camera at the production site, the assistance system can check whether or not the assembly process is going to plan while production is taking place,” says Eduardo Monari, Head of the Cognitive Industrial Vision research group, explaining the use of DeepInspect. He adds: “DeepInspect is like a digital assembly guide which visualizes the next assembly step in order to assist employees.”

In order to do this, an intelligent camera observes the step-by-step assembly of the product while it is taking place. If a step has been carried out correctly, the system automatically moves on to the next step. “It only observes and checks the products and components, not the assembly workers,” says Monari. The assistance system is also not designed to recognize assembly errors which could be traced back to particular employees. Instead, it is designed to look ahead to ensure correct assembly. “This perspective is important to us,” says Monari. “It focuses on what matters: Ensuring from the start that as few errors as possible actually occur and providing the best possible constructive support for employees in accordance with data protection requirements.”
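
The step-by-step check described above can be pictured as a small state machine. The following is only a minimal sketch under stated assumptions, not the DeepInspect implementation: the names `Step`, `AssemblyGuide` and `observe`, the status strings, and the idea that the camera pipeline delivers a mapping from named positions to recognized part labels are all hypothetical. Note that the sketch looks only at components and positions, never at the worker.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One assembly step (hypothetical structure)."""
    part: str      # component expected in this step
    position: str  # named slot where the component should be placed


class AssemblyGuide:
    """Advances through the steps only when the expected part is seen in place."""

    def __init__(self, steps):
        self.steps = list(steps)
        self.index = 0

    @property
    def done(self):
        return self.index >= len(self.steps)

    def current(self):
        """Return the step to display next, or None when assembly is complete."""
        return None if self.done else self.steps[self.index]

    def observe(self, detections):
        """Check one camera observation.

        `detections` is assumed to map a position name to the part label
        recognized there. Returns a status string.
        """
        if self.done:
            return "done"
        step = self.steps[self.index]
        seen = detections.get(step.position)
        if seen == step.part:
            self.index += 1  # correct step: move on automatically
            return "done" if self.done else "advance"
        if seen is None:
            return "waiting"  # nothing placed at the target position yet
        return "wrong_part"  # something else occupies the target position
```

A guide built from two steps would report `"waiting"` for an empty observation, `"wrong_part"` if an unexpected component sits in the target position, and `"advance"` once the expected component is recognized there.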

Camera-based object recognition: Learning components made easy (easier)

Various systems designed to ensure that parts are put together correctly during complex assembly processes are available on the market. A range of solutions attempt to track and record the movements of people themselves in order to draw conclusions regarding the quality of the assembly steps undertaken. However, according to Eduardo Monari, users quickly find that this approach has its limits. Firstly, the processes involved can be too complex. Secondly, this approach falls short if the focus needs to be on positioning complex components correctly in a complex environment rather than ensuring that movements are ergonomically correct. Observing movements would therefore involve little more than an indirect check as to whether the assembly work was being carried out correctly. What is more, it would be neither efficient nor successful. “Some systems would also lead to critical questions regarding European data protection laws as they usually record whole people,” he adds.

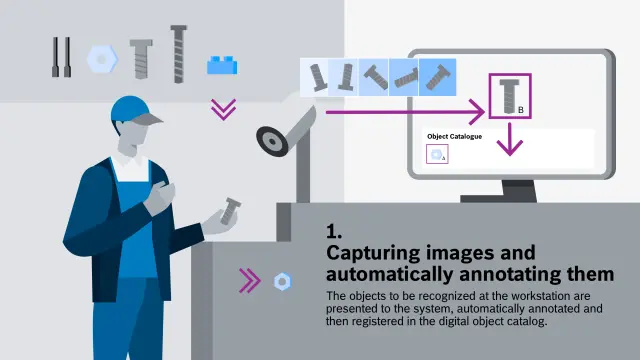

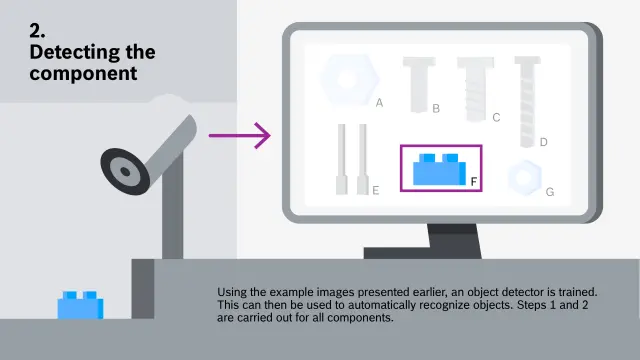

Other solutions already rely on camera-based intelligent object recognition, though the team headed by Monari would like to optimize this with DeepInspect. After all, “training” is a complicated, time-consuming process for people and machines. With conventional methods, several hundred different images of a component are needed to train the assembly assistance system in order to obtain a reliable result. The human trainers must then spend a long time filtering out any images that are not relevant and linking the relevant images to so-called image annotations. “The required image annotation has always been the stumbling block when it comes to acceptance and using the system cost-effectively in industrial assembly – but at Bosch Research, we have worked on this successfully,” says Project Manager Matthias Kayser.

With the help of an intelligent image evaluation system, the new DeepInspect software filters out irrelevant images while the component in question is being photographed and annotates the remaining images automatically. “This way, it takes just 15 to 20 minutes to prepare the training data,” says Kayser. Another advantage is that because the process takes much less time, the trainers and, consequently, the system technicians at the plant can work iteratively to achieve the optimum result. They first produce 100 images, test to see whether this is enough and, if not, produce additional images until the result enables reliable object recognition and checks. What is more, DeepInspect offers the benefits of a “smart, interactive assembly guide” from the very first iteration.
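
The iterative procedure described above (produce 100 images, test, add more if needed) can be sketched as a simple loop. This is an illustration under stated assumptions rather than the actual DeepInspect workflow: the function name `collect_until_reliable`, the callables `capture_batch` and `train_and_score`, the 0.95 target score and the cap of 10 rounds are all hypothetical. Only the starting batch of 100 images comes from the text.

```python
def collect_until_reliable(capture_batch, train_and_score,
                           target=0.95, batch_size=100, max_rounds=10):
    """Grow the training set in batches until recognition is reliable enough.

    capture_batch(n)        -> list of n new, already annotated images (assumed)
    train_and_score(images) -> recognition score between 0 and 1 (assumed)
    target and max_rounds are illustrative values, not taken from the article.
    """
    images = []
    history = []  # (number of images, score) after each round
    for _ in range(max_rounds):
        images.extend(capture_batch(batch_size))  # produce another batch
        score = train_and_score(images)           # test whether it is enough
        history.append((len(images), score))
        if score >= target:
            break                                 # reliable: stop collecting
    return images, history
```

Because every round returns a trained and scored model, the guide is usable after the first batch and simply improves with each further iteration, which matches the point made in the text.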

First prototypes in use

Bosch Research engineers are currently testing prototypes of DeepInspect. Together with Mercedes-Benz Group AG, they demonstrated the assembly assistance system at the ARENA2036 research campus in Stuttgart using a battery assembly system as an example and tested it at a manual assembly station in a laboratory in Renningen. “We are currently developing specific pilot applications for four Bosch plants. These focus on very different production areas at Bosch,” says Monari. In the future, engineers at the plants will be able to train the interactive assembly guide for their product themselves with the help of the software – for happy employees at manual assembly stations.

Eduardo Monari

Dr. Eng. Eduardo Monari received his PhD in electrical engineering from the Karlsruhe Institute of Technology in Germany in 2011. Since 2018, he has been working at Bosch Research as Head of the Cognitive Industrial Vision research group (CR/APA2). He is also responsible for coordinating projects on AI in production systems at Bosch Research. His research background is in 2D/3D vision and signal processing, especially under uncontrolled and complex conditions. In this context, his research interest is always linked to the question of how cognitive machines and systems can be trained or taught quickly, easily and with minimal effort.

Matthias Kayser

Dr. Matthias Kayser earned his doctorate in the field of image-based object recognition for traffic monitoring at Goethe University Frankfurt. When he joined Bosch’s central research department, his main focus was the detection and localization of objects in the environment using artificial intelligence in production technology and industrial robotics. As part of the DeepInspect project, Matthias Kayser is researching neural networks that recognize industrial components for the assembly assistance system and methods for training these networks with limited and automatically annotated data. In addition to the technical challenges, he also enjoys the interdisciplinary collaboration involved and networking with other Bosch plants around the world, which has enabled the system to be tested in many different areas.