Learning visual models using a knowledge graph as a trainer

Bosch Research Blog | Posted by Sebastian Monka, Lavdim Halilaj and Stefan Schmid, 2022-07-28

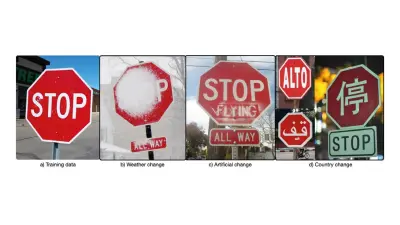

Most autonomous cars use cameras to navigate the real world. Computer vision (CV) is a field of artificial intelligence that enables computers to interpret and gain a basic understanding of digital images or videos. For example, when an autonomous car detects and identifies a stop sign, the car should slow down and stop. Recent CV approaches rely on deep learning methods because they can accurately identify and classify objects given a large and balanced set of training data. However, it has been shown that minor variations between the training and application domains can lead to unpredictable and catastrophic errors. As illustrated in Figure 1, these variations occur naturally when the models are deployed in the real world.

Therefore, the main challenge for CV in autonomous driving is the enormous variety of contexts in which an autonomous car must function safely. For example, it must be able to reliably detect traffic signs under many different lighting and weather conditions.

Moreover, automotive suppliers and car manufacturers face the immense challenge of developing CV-based solutions for autonomous cars that can also operate across country borders. With state-of-the-art CV algorithms, this implies that training a neural network (NN) requires a balanced and representative set of training data for every country where the solution is released. This places a very high burden on development and dramatically increases time and cost.

The knowledge graph as a trainer

In collaboration with the University of Trier, we have developed a knowledge-infused approach for learning visual models. Knowledge-infused machine learning combines the best of both worlds: explicit and contextualized domain knowledge (symbolic AI) and data-driven machine learning methods (sub-symbolic AI).

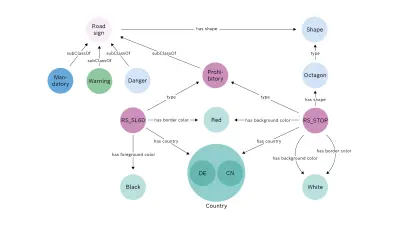

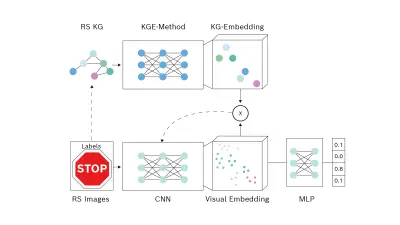

Specifically, our solution uses a knowledge graph neural network (KG-NN), in which the training of an NN is guided by prior knowledge that is invariant across domains and represented in the form of a knowledge graph (KG). The prior knowledge is first encoded in the KG with respective concepts and their relationships. Figure 2 illustrates an excerpt of the road signs KG (RSKG). The RSKG contains high-level semantic relationships between the road signs of the dataset. For instance, the stop sign (RS_STOP) has the shape Octagon, the type Prohibitory, and the countries: Germany (DE) and China (CN).
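To make the RS_STOP example concrete, here is a minimal sketch of how such facts could be stored as subject-predicate-object triples. The predicate names (`hasShape`, `hasType`, `usedIn`) and the helper functions are illustrative assumptions, not the actual RSKG schema.

```python
from collections import defaultdict

# Triples (subject, predicate, object) mirroring the RS_STOP example in the text.
# Predicate names are assumptions made for this sketch, not the RSKG vocabulary.
TRIPLES = [
    ("RS_STOP", "hasShape", "Octagon"),
    ("RS_STOP", "hasType", "Prohibitory"),
    ("RS_STOP", "usedIn", "DE"),
    ("RS_STOP", "usedIn", "CN"),
]


def build_index(triples):
    """Index triples by subject, then predicate, for simple lookups."""
    index = defaultdict(lambda: defaultdict(set))
    for subject, predicate, obj in triples:
        index[subject][predicate].add(obj)
    return index


def objects_of(index, subject, predicate):
    """Return the sorted objects linked to `subject` via `predicate`."""
    return sorted(index.get(subject, {}).get(predicate, set()))


index = build_index(TRIPLES)
stop_countries = objects_of(index, "RS_STOP", "usedIn")
stop_shape = objects_of(index, "RS_STOP", "hasShape")
```

Because these facts hold regardless of lighting, weather, or camera, they are the kind of domain-invariant knowledge the approach relies on.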

In the second step, the KG is transformed into a vector space via a knowledge graph embedding (KGE) method. Since deep learning methods operate in high-dimensional vector spaces, we use this space to interact with the NN and to infuse prior knowledge.
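This post does not name the KGE method used. As an illustration of what an embedding provides, the sketch below assumes a translation-style scoring rule (in the spirit of TransE), where a triple is plausible if head + relation lands close to tail. The 2-D vectors are hand-set rather than learned, and `RS_YIELD` and `Triangle` are hypothetical additions for contrast.

```python
import math

# Hand-set 2-D vectors for illustration only; a real KGE method would learn
# them from the graph. RS_YIELD and Triangle are hypothetical entities.
ENTITY = {
    "RS_STOP": (0.0, 0.0),
    "RS_YIELD": (0.0, 1.0),
    "Octagon": (1.0, 0.0),
    "Triangle": (1.0, 1.0),
}
RELATION = {"hasShape": (1.0, 0.0)}


def score(head, relation, tail):
    """Translation-style distance ||h + r - t||; lower means more plausible."""
    h, r, t = ENTITY[head], RELATION[relation], ENTITY[tail]
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def rank_tails(head, relation, candidates):
    """Order candidate tails from most to least plausible."""
    return sorted(candidates, key=lambda tail: score(head, relation, tail))


true_score = score("RS_STOP", "hasShape", "Octagon")
corrupt_score = score("RS_STOP", "hasShape", "Triangle")
best_shape = rank_tails("RS_YIELD", "hasShape", ["Octagon", "Triangle"])[0]
```

In this toy setup the true triple scores lower than the corrupted one, which is the property an embedding method is trained to achieve at scale.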

A conventional NN is then trained with the supervision of the KGE. Using a contrastive loss function, the KG-NN learns to adapt its visual embedding space and thus its weights according to the knowledge graph embedding.
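The exact loss formulation is given in the paper [7], not in this post. The following sketch assumes an InfoNCE-style contrastive objective: the image embedding is pulled toward the KG embedding of its own class and pushed away from the others. The function names, the temperature value, and the 2-D vectors are assumptions chosen for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(image_vec, class_vecs, target, temperature=0.1):
    """Negative log-softmax of the similarity to the target class embedding."""
    logits = {
        name: cosine(image_vec, vec) / temperature
        for name, vec in class_vecs.items()
    }
    max_logit = max(logits.values())  # subtract the max for numerical stability
    log_norm = max_logit + math.log(
        sum(math.exp(value - max_logit) for value in logits.values())
    )
    return log_norm - logits[target]


# Toy KG embeddings per class (hand-set, hypothetical values).
KG_EMBEDDINGS = {"RS_STOP": (1.0, 0.0), "RS_YIELD": (0.0, 1.0)}

# An image embedding near its class vector yields a small loss,
# one near the wrong class vector yields a large loss.
aligned = contrastive_loss((0.9, 0.1), KG_EMBEDDINGS, "RS_STOP")
misaligned = contrastive_loss((0.1, 0.9), KG_EMBEDDINGS, "RS_STOP")
```

Minimizing such a loss over many images is what drives the network's visual embedding space toward the structure of the KG embedding.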

Evaluation – KG-NNs outperform conventional NNs

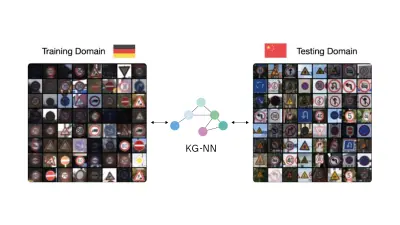

We evaluate our KG-NN approach on visual transfer learning tasks using road sign recognition datasets from Germany and China. The results show that an NN trained with a knowledge graph as a trainer outperforms a conventional NN, particularly in transfer learning scenarios in which the real-world data differs from the training data.

We tested the KG-NN in a transfer learning scenario, in which a model is trained on only one dataset (Germany) and evaluated on another (China). Our solution outperforms a conventional NN by 7.1% in terms of accuracy on the unseen target data (China).

We also evaluated the KG-NN on supervised domain adaptation, in which a small amount of target data from China is available at training time in addition to the full training data from Germany. Our experiments showed that the KG-NN can adapt significantly faster to new domains than conventional NNs. With less than 50% of the images from China, the KG-NN outperforms the conventional NN trained on the full Chinese dataset [7].

Conclusion

Training robust NNs is crucial in real-world scenarios, especially for safety-relevant tasks such as CV for autonomous driving. However, conventional deep learning models fail if the real-world data is slightly different from the training data. Our work has shown that using a learning approach with a knowledge graph as a trainer (KG-NN) can significantly increase the robustness of visual models in real-world scenarios. Moreover, because our KG-NN solution adapts faster to new domains, it can drastically reduce the amount of training data required.

Sources Figure 1:

[1] "STOP" (Public Domain) by dankeck

[2] "stop sign" (CC BY 2.0) by renee_mcgurk

[3] "2012-04-17%2015.45.54" (Public Domain) by mlinksva

[4] "ALTO" (Public Domain) by José Miguel S

[5] "Arabic Stop Sign" (CC BY 2.0) by AdamAxon

[6] "chinese stop sign" (CC BY-SA 2.0) by riNux

[7] Learning Visual Models Using a Knowledge Graph as a Trainer

Sebastian Monka

Sebastian Monka is a research scientist at Bosch Research in Renningen, Germany, and a PhD student at the University of Trier. His dissertation research focuses on improving deep-learning-based computer vision by introducing symbolic knowledge from knowledge graphs. In particular, he works on transfer learning problems in the field of autonomous driving, i.e., the ability to apply deep learning models to different but related problems.

Lavdim Halilaj

Lavdim works as a research scientist in the field of knowledge-driven machine learning. His primary interest is to investigate how prior knowledge, represented in the form of knowledge graphs that encapsulate high-level semantics, can be leveraged and infused into machine learning models to enable autonomous driving. Prior to joining Bosch, he earned a PhD in Computer Science from the University of Bonn while working at Fraunhofer IAIS, where he applied semantic technologies to use cases related to domain knowledge modelling and heterogeneous data integration.

Stefan Schmid

Stefan is a Senior Expert at Bosch Research in the areas of Knowledge Engineering and Digital Twins. After his PhD at Lancaster University (U.K.) and nine years at NEC Laboratories Europe (Germany), Stefan joined Bosch in 2013 to work on research topics related to IoT, semantic data integration, and knowledge engineering. Stefan’s main interest is to investigate how semantically enriched and contextualized data and factual knowledge can be used to improve and automate data-driven applications, and help humans and machines make sense of the physical and digital worlds. Additionally, Stefan actively contributes to the Bosch Data Strategy project, where he establishes data architecture foundations and data management applications for a knowledge-driven company.

Further Information

Related Resources

The following resources provide a more in-depth understanding of knowledge-infused machine learning and its application for use cases of interest to Bosch.

Publications

- Learning Visual Models Using a Knowledge Graph as a Trainer (International Semantic Web Conference, ISWC 2021)

- A Survey on Transfer Learning using Knowledge Graphs (Semantic Web Journal, 2021)

- Infusing Contextual Knowledge Graphs for Visual Object Recognition (accepted at International Semantic Web Conference, ISWC 2022)