Neuro-symbolic AI for scene understanding

Bosch Research Blog | Post by Cory Henson and Alessandro Oltramari 2022-07-19

Neuro-symbolic AI is a synergistic integration of knowledge representation (KR) and machine learning (ML) leading to improvements in scalability, efficiency, and explainability. The topic has garnered much interest over the last several years, including at Bosch where researchers across the globe are focusing on these methods. In this short article, we will attempt to describe and discuss the value of neuro-symbolic AI with particular emphasis on its application for scene understanding. Specifically, we will highlight two applications of the technology: autonomous driving and traffic monitoring.

At the Bosch Research and Technology Center in Pittsburgh, Pennsylvania, we first began exploring and contributing to neuro-symbolic AI in 2017.

Neuro-symbolic methods reflect a pragmatic approach to AI, which can be distilled into three basic observations:

- In real-world applications, it is often impractical and inefficient to learn all relevant facts and data patterns from scratch, especially when prior knowledge is available.

- Knowledge/Symbolic systems utilize well-formed axioms and rules, which guarantee explainability of both asserted and inferred knowledge (a requirement that is hard to satisfy for neural systems).

- Neural/Sub-symbolic systems make data-driven algorithms scalable and automatic knowledge construction viable.

It follows that neuro-symbolic AI combines neural/sub-symbolic methods with knowledge/symbolic methods to improve scalability, efficiency, and explainability.

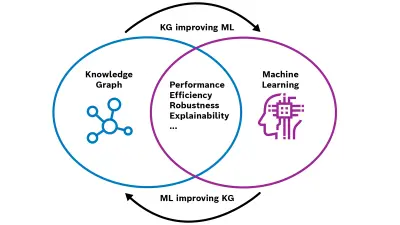

Over the last few years, neuro-symbolic AI has been flourishing thanks to new methods for integrating deep learning and knowledge graph technologies. Moreover, a variety of downstream tasks, such as link prediction, question answering, and robot navigation, have been instrumental in benchmarking neuro-symbolic methods and evaluating datasets in a rigorous and reproducible way. As Figure 1 shows, neuro-symbolic AI can unfold along two symmetric pathways: a) symbolic priors can be used to improve learning; b) learning can be used to generate symbolic abstractions (e.g., ontologies, semantic frames). Such bi-directionality – now commonly referred to as a “virtuous cycle” – can apply at different levels of granularity, from specific application domains (e.g., autonomous driving) to general-purpose tasks (e.g., commonsense reasoning).

What is neuro-symbolic AI?

At Bosch Research in Pittsburgh, we are particularly interested in the application of neuro-symbolic AI for scene understanding. Scene understanding is the task of identifying and reasoning about entities – i.e., objects and events – which are bundled together by spatial, temporal, functional, and semantic relations.

In the following sections, we will highlight two use cases for improving scene understanding with neuro-symbolic AI methods. The first use case introduces knowledge graph embedding (KGE) technology and its application for autonomous driving: enhancing scene understanding from the perspective of an ego-vehicle. The second use case discusses neuro-symbolic reasoning and its application for traffic monitoring: improving scene understanding from the perspective of stationary cameras. In both cases, the scenes under consideration include moving objects (vehicles, pedestrians, etc.) and static objects (e.g., traffic lights, fire hydrants) perceived in the field of view over a relevant period.

Knowledge graph embeddings for autonomous driving

Knowledge graph embedding (KGE) is the machine learning task of learning a latent, continuous vector space representation of the nodes and edges in a knowledge graph (KG) that preserves their semantic meaning. This learned representation of prior knowledge can benefit a wide variety of neuro-symbolic AI tasks. One task of particular importance is knowledge completion (i.e., link prediction), which has the objective of inferring new knowledge, or facts, based on existing KG structure and semantics. These new facts are typically encoded as additional links in the graph.

In the context of autonomous driving, knowledge completion with KGEs can be used to predict entities in driving scenes that may have been missed by purely data-driven techniques. For example, consider the scenario of an autonomous vehicle driving through a residential neighborhood on a Saturday afternoon. Its perception module detects and recognizes a ball bouncing on the road. What is the probability that a child is nearby, perhaps chasing after the ball? This prediction task requires knowledge of the scene that is out of scope for traditional computer vision techniques. More specifically, it requires an understanding of the semantic relations between the various aspects of a scene – e.g., that the ball is a preferred toy of children, and that children often live and play in residential neighborhoods. Knowledge completion enables this type of prediction with high confidence, given that such relational knowledge is often encoded in KGs and may subsequently be translated into embeddings.
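To make the ball example concrete, here is a minimal sketch of link prediction over embeddings. It assumes a translation-style scoring function (in the spirit of TransE), which is one common choice and not necessarily the model used in our work. The entity names, the `suggests_presence_of` relation, and the hand-set 2-D vectors are all hypothetical; in practice, the vectors would be learned from a KG rather than written by hand.

```python
import math

# Hypothetical 2-D embeddings, hand-set for illustration only.
# A real system would learn these vectors from a knowledge graph.
ENTITY = {
    "ball": (1.0, 0.0),
    "child": (0.9, 1.1),
    "fire_hydrant": (-1.0, 0.5),
    "truck": (-0.5, -1.0),
}

# Hypothetical relation vector. Under a translation-style model,
# head + relation should land close to the tail of a plausible triple.
RELATION = {
    "suggests_presence_of": (0.0, 1.0),
}


def score(head, relation, tail):
    """Return the negative distance between (head + relation) and tail.

    Higher (closer to zero) means the triple is more plausible.
    """
    h, r, t = ENTITY[head], RELATION[relation], ENTITY[tail]
    translated = [hi + ri for hi, ri in zip(h, r)]
    return -math.dist(translated, t)


def rank_tails(head, relation):
    """Rank all other entities as candidate tails for (head, relation, ?)."""
    candidates = [e for e in ENTITY if e != head]
    return sorted(candidates, key=lambda t: score(head, relation, t), reverse=True)


if __name__ == "__main__":
    for tail in rank_tails("ball", "suggests_presence_of"):
        print(tail, round(score("ball", "suggests_presence_of", tail), 3))
```

With these toy vectors, `child` is ranked first as the missing tail for the detected `ball`. The ranking is only as good as the relational knowledge encoded in the embeddings, which is why the quality of the underlying KG matters.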

Neuro-symbolic reasoning for traffic monitoring

In neuro-symbolic reasoning, logic-based, deterministic methods are combined with data-driven, statistical algorithms. The former provide semantically transparent inferences that stem from explicit axioms and rules, but require significant, time-consuming manual effort to scale. The latter are effective mechanisms to automatically generalize from vast troves of data, although the underlying learning process occurs in a latent space representation, where semantic features remain implicit. Illustrating how these different methods can converge to become reciprocally beneficial is out of scope here, as the modes of integration depend on multiple factors, including the downstream task under consideration and the amount and type of data, knowledge, and computational resources available. What is important to highlight, however, is that by leveraging explainability and scalability, neuro-symbolic reasoning plays a central role in domains where scene understanding is key to informing human decisions and actions.

In the context of video surveillance, neuro-symbolic reasoning can be used to enhance the situational awareness of a remote operator and reduce the human effort required to sift through 24/7 video streams from stationary cameras. Let us consider traffic monitoring applications. State-of-the-art computer vision can achieve high accuracy in classifying vehicles and pedestrians, tracking their trajectories, and inferring basic facts, e.g., assessing the volume of traffic by counting vehicles per lane. However, machine perception alone is insufficient when complex behavior is to be recognized. For example, consider a “near-miss” situation, namely an accident that “almost” happened (e.g., a car coming to a sudden stop before a jaywalker). Recognizing it requires counterfactual reasoning (“what might have happened if the driver had not braked”) based on visual cues (the sequences of relevant positions of the car and the pedestrian). Another interesting case, which goes beyond purely data-driven techniques, concerns detecting criminal activities, such as “breaking into a car”. This activity consists of different causally related stages over an extended interval of time, and recognizing it depends on distinguishing anomalous from normal behavior – e.g., using a tool to force a car door open vs. inserting a key in the door lock. Both examples can be dealt with by a neuro-symbolic reasoning system in which a vision-based model trained with videos from stationary cameras feeds into a knowledge-based rule engine to deduce high-level features of scenes.
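The near-miss case can be sketched as a small pipeline in which perception output is turned into symbolic facts and a rule engine draws the high-level conclusion. This is an illustrative sketch under stated assumptions, not a description of a deployed system: the `Observation` fields stand in for the output of a vision model, and the thresholds, fact names, and rules are hypothetical. The counterfactual ("what if the driver had not braked") is approximated by the time the car would need to reach the pedestrian at unchanged speed.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One time step of (hypothetical) perception output for a car/pedestrian pair."""
    t: float                  # timestamp in seconds
    car_speed: float          # metres per second
    gap: float                # distance between car and pedestrian in metres
    pedestrian_on_road: bool


# Hypothetical thresholds, chosen only for illustration.
HARD_BRAKE = 4.0  # deceleration in m/s^2 that counts as hard braking
MAX_TTC = 1.5     # time to collision (seconds) that counts as short

# Hypothetical rules: (set of premises, conclusion).
RULES = [
    ({"pedestrian_on_road", "short_time_to_collision"},
     "collision_expected_without_braking"),
    ({"collision_expected_without_braking", "hard_braking", "no_contact"},
     "near_miss"),
]


def derive_facts(track):
    """Turn a sequence of observations into a set of symbolic facts."""
    facts = set()
    for prev, cur in zip(track, track[1:]):
        decel = (prev.car_speed - cur.car_speed) / (cur.t - prev.t)
        if decel >= HARD_BRAKE:
            facts.add("hard_braking")
        if prev.pedestrian_on_road:
            facts.add("pedestrian_on_road")
            # Counterfactual cue: time to reach the pedestrian at unchanged speed.
            if prev.car_speed > 0 and prev.gap / prev.car_speed <= MAX_TTC:
                facts.add("short_time_to_collision")
    if all(obs.gap > 0 for obs in track):
        facts.add("no_contact")
    return facts


def forward_chain(facts, rules):
    """Apply the rules repeatedly until no new fact can be derived."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


# A car braking hard in front of a jaywalker and stopping 1.5 m short.
near_miss_track = [
    Observation(0.0, 12.0, 10.0, True),
    Observation(0.5, 8.0, 5.0, True),
    Observation(1.0, 3.0, 2.25, True),
    Observation(1.5, 0.0, 1.5, True),
]

# A car passing a pedestrian who stays on the sidewalk.
normal_track = [
    Observation(0.0, 12.0, 10.0, False),
    Observation(0.5, 12.0, 8.0, False),
    Observation(1.0, 12.0, 9.0, False),
]

if __name__ == "__main__":
    print(sorted(forward_chain(derive_facts(near_miss_track), RULES)))
    print(sorted(forward_chain(derive_facts(normal_track), RULES)))
```

Because the conclusion is derived from named facts and explicit rules, an operator can trace why a scene was flagged as a near-miss, which is the kind of transparency a purely learned classifier does not provide on its own.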

Bosch code of ethics for AI

The Bosch code of ethics for AI emphasizes the development of safe, robust, and explainable AI products. By providing explicit symbolic representations, neuro-symbolic methods enable explainability of often opaque neural, sub-symbolic models, which aligns well with these values.


Cory Henson

Cory is a lead research scientist at Bosch Research and Technology Center with a focus on applying knowledge representation and semantic technology to enable autonomous driving. He also holds an Adjunct Faculty position at Wright State University. Prior to joining Bosch, he earned a PhD in Computer Science from WSU, where he worked at the Kno.e.sis Center applying semantic technologies to represent and manage sensor data on the Web.

Alessandro Oltramari

Alessandro joined Bosch Corporate Research in 2016, after working as a postdoctoral fellow at Carnegie Mellon University. At Bosch, he focuses on neuro-symbolic reasoning for decision support systems. Alessandro’s primary interest is to investigate how semantic resources can be integrated with data-driven algorithms, and help humans and machines make sense of the physical and digital worlds. Alessandro holds a PhD in Cognitive Science from the University of Trento (Italy).

Further Information

Related Resources

The following resources provide a more in-depth understanding of neuro-symbolic AI and its application for use cases of interest to Bosch.

Articles on the Bosch Research Blog

- Assisting the technical workforce with Neuro-symbolic AI (Feb. 2022)

- Introduction to knowledge-infused learning for autonomous driving (June 2020)

- Six grand opportunities from knowledge-infused learning for autonomous driving (July 2020)

Publications

- Generalizable Neuro-Symbolic Systems for Commonsense Question Answering (Book Chapter, 2021)

- Knowledge-infused Learning for Entity Prediction in Driving Scenes (Journal Paper, 2020)

- Neuro-symbolic Architectures for Context Understanding (Book Chapter, 2020)

Keynotes, tutorials, summer schools

- Tutorial on Knowledge-infused Learning for Autonomous Driving, at the 2022 International Semantic Web Conference (Oct. 2022)

- Oxford Machine Learning Summer School (August 2022)

- Keynote on Knowledge-infused Learning for Autonomous Driving, co-located with the 2021 Knowledge Graph Conference (May 2021)

- Keynote on Ontologies for Artificial Minds, at the 10th International Conference on Formal Ontology in Information Systems (September 2018)