When a stop sign turns into a vase — Robust machine learning under worst-case perturbations

Bosch Research Blog | Posted by Wan-Yi Lin, 2023-07-20

As machine learning becomes increasingly widespread in Bosch systems and products, one question naturally arises:

How do we ensure that these machine learning systems work reliably under all circumstances for real-world deployments?

The stakes are especially high when machine learning is applied to safety-critical components, such as autonomous vehicles or automated control of building technologies. We at Bosch are therefore driven to develop machine learning systems that our clients can confidently put their lives in the hands of.

Bosch Research addresses this question by evaluating, improving, and guaranteeing the adversarial robustness of machine learning systems. This work is funded by the Defense Advanced Research Projects Agency (DARPA) Guaranteeing AI Robustness Against Deception (GARD) program and carried out jointly with Carnegie Mellon University. Adversarial robustness is valuable because it lets us ensure, and improve, model performance under worst-case scenarios.

What are worst-case scenarios?

Worst-case scenarios are small, deliberately crafted changes to the input of a machine learning system that cause it to make false predictions. They can be as simple as an adversarial pattern displayed in the scene that causes an image-based object recognition system to misidentify a stop sign. Imagine, for example, an autonomous vehicle driving past a shop window that displays a poster with such a pattern: the vehicle could suddenly drive through a stop sign without stopping, endangering other road users.
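To make "small changes" concrete, here is a minimal sketch of a worst-case perturbation against a toy linear classifier, assuming each input feature may change by at most `eps` (an L-infinity budget). It follows the fast gradient sign method (FGSM) idea: for a linear score the gradient with respect to the input is simply the weight vector, so shifting every feature by `eps` against the sign of its weight is the worst case within that budget. The weights, inputs, and function names are made-up illustrations, not a real object detector; attacks on deep detectors compute gradients through the network, often over several steps, and physical patches are constrained differently.

```python
def linear_score(w, b, x):
    """Score of a toy linear classifier; a score > 0 means "stop sign"."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, eps):
    """Worst-case L-infinity perturbation of size eps against a linear score.

    The gradient of w.x + b with respect to x is w, so moving each feature by
    -eps * sign(w_i) lowers the score by eps * sum(|w_i|), the largest drop
    possible when no single feature may change by more than eps.
    """
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


# Made-up example: 100 features with alternating weights of +/-0.1.
d = 100
w = [0.1 if i % 2 == 0 else -0.1 for i in range(d)]
b = 0.0
x = [0.52 if i % 2 == 0 else 0.48 for i in range(d)]  # clean input

x_adv = fgsm_linear(w, x, eps=0.05)
print(round(linear_score(w, b, x), 3))      # 0.2  -> "stop sign"
print(round(linear_score(w, b, x_adv), 3))  # -0.3 -> no longer a stop sign
```

No feature moves by more than 0.05, yet because all 100 small shifts push the score in the same direction, the prediction flips.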

Machine learning against worst-case scenarios

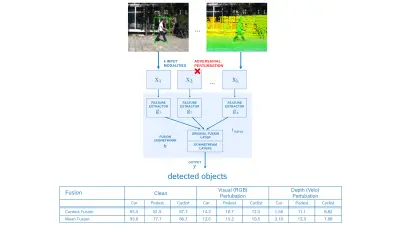

One may think that incorporating more sensor modalities into an AI system improves its robustness against worst-case scenarios. The intuition is that if only one modality is perturbed, the remaining, unperturbed sensor signals should jointly compensate for it. Two common modalities in autonomous driving are 3D scanning and color images. We trained a multimodal object detector (3D scanning together with color images) on public autonomous driving datasets and showed that even when only one sensor signal, for example the 3D scan, contains worst-case noise, the performance of the object detector drops significantly.
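Why the unperturbed modality cannot simply outvote the attacked one can be seen in a deliberately simplified sketch. It assumes naive late fusion that averages per-modality class probabilities; the class list, the probability values, and the fully adversarial 3D-scan output are made-up illustrations, not the detector or fusion scheme evaluated above.

```python
CLASSES = ["car", "pedestrian", "background"]


def late_fusion(per_modality_probs):
    """Naive late fusion: average the class probabilities of all modalities."""
    n = len(per_modality_probs)
    return [sum(p[k] for p in per_modality_probs) / n for k in range(len(CLASSES))]


def decide(probs):
    """Return the class with the highest fused probability."""
    return CLASSES[max(range(len(probs)), key=probs.__getitem__)]


camera = [0.7, 0.2, 0.1]          # clean camera branch: "car"
lidar_clean = [0.6, 0.3, 0.1]     # clean 3D-scan branch: "car"
lidar_attacked = [0.0, 0.0, 1.0]  # worst case: attacker drives the 3D branch to "background"

print(decide(late_fusion([camera, lidar_clean])))     # car
print(decide(late_fusion([camera, lidar_attacked])))  # background
```

The camera branch is untouched and still favors "car", but averaging gives the attacked branch an equal say, so a single confidently wrong modality is enough to make the fused system miss the car.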

Safety-related infrastructure is similarly vulnerable to adversarial attacks. A common example is a multi-view system, in which multiple cameras, including overhead surveillance cameras and cameras mounted on vehicles, observe the same scene and exchange information, so that objects occluded in some camera views can still be inferred from other views. However, a single adversarial object placed in the scene can attack many cameras at once and cause the whole multi-view system to fail.

How do you replicate a worst-case scenario?

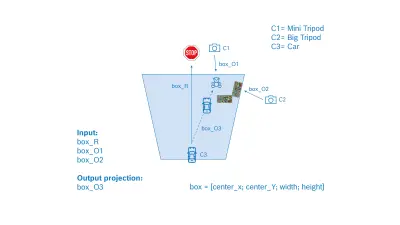

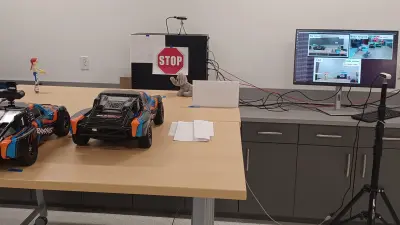

Our approach is to replicate the view of an autonomous car. In our experiment, a color camera mounted on an F1TENTH car, with an object detector running onboard, captures the objects to be detected. This setup mimics autonomous driving inside an office.

The scene is captured from three different static camera views. We name the three cameras C1 Mini Tripod, C2 Big Tripod, and C3 Car. A pre-trained standard YOLOv5 object detector runs on each camera. In this distributed setting, each camera shares information about its object detections with the other cameras.


At the beginning of the video, two cameras (Big Tripod and Mini Tripod) detect an object labeled “teddy bear”, while the same object is occluded in the view of the third camera (Car). The two tripod cameras share information about the detected “teddy bear”, and the Car camera uses it to estimate the bounding box of the occluded “teddy bear”, constructing a “virtual teddy bear” box in its own view. In this way, the distributed multi-view perception system can detect objects that are occluded in individual views.

When the two tripod cameras (Big Tripod and Mini Tripod) are both exposed to the adversarial patch at the same time, the object detectors running on them fail to detect the “teddy bear” located near the patch. Because neither camera detects the “teddy bear”, the Car camera assumes that there is no “teddy bear” in the scene, and no “virtual teddy bear” box is constructed.
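The failure mode follows directly from how detections are shared. The sketch below is a hypothetical simplification, not the system used in the experiment: it assumes calibrated cameras that report detections as positions on a shared ground plane, treats two reports as the same object when the labels match and the positions lie within a made-up `radius`, and omits projecting the result back into the Car camera's image. The names `Detection`, `same_object`, and `virtual_detections` are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str
    x: float  # object position on a shared ground plane, in metres (assumed)
    y: float


def same_object(a, b, radius=0.5):
    """Treat two detections as one object if labels match and positions are close."""
    return a.label == b.label and math.hypot(a.x - b.x, a.y - b.y) <= radius


def virtual_detections(own, peers, radius=0.5):
    """Objects reported by at least one peer camera but missing from the local view."""
    virtual = []
    for peer_dets in peers:
        for d in peer_dets:
            if not any(same_object(d, k, radius) for k in own + virtual):
                virtual.append(d)
    return virtual


# Clean scene: both tripod cameras see the teddy bear; the Car camera does not.
mini_tripod = [Detection("teddy bear", 2.0, 1.0)]
big_tripod = [Detection("teddy bear", 2.1, 1.05)]
car = []  # the teddy bear is occluded in the Car camera's view
print(virtual_detections(car, [mini_tripod, big_tripod]))  # one virtual "teddy bear"

# Patch visible to both tripods: their detectors report nothing, so nothing is shared.
print(virtual_detections(car, [[], []]))  # []
```

The Car camera can only reconstruct what its peers report, so a patch that suppresses the detection in every peer view removes the object from the whole system, no matter how many views contribute.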

This simple experiment demonstrates the vulnerabilities of state-of-the-art models and raises serious concerns about deploying them in safety-critical applications in the real world.

These worst-case attacks are so strong that, if they are not taken into account when AI systems are built, they can cause most AI systems to fail, irrespective of the number of modalities or views. At Bosch Research, we are developing machine learning systems that are robust to adversarial attacks, so that they continue to function correctly even in a worst-case scenario.

About our project

This project is the largest publicly funded project at Bosch Research North America. Over the past four years, it has produced eight top-tier conference papers and more than 25 patents for Bosch on the adversarial robustness of machine learning systems. We have developed a wide range of methods to improve or guarantee the robustness of machine learning systems. For the adversarial attack scenarios discussed above, we proposed odd-one-out fusion [i], randomized input augmentation [ii], and rejection of uncertain samples [iii] for robust multimodal systems and image classification. At Bosch Research, we believe that the robust machine learning solutions we are developing will help build even more reliable Bosch AI products in the future.
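Two of the ideas named above, randomizing the input and rejecting uncertain samples, can be illustrated together with a generic sketch in the style of randomized smoothing: classify many Gaussian-noised copies of the input, take a majority vote, and abstain when the vote is not decisive. This is not the method of [ii] or [iii]; it is a simplified stand-in that uses a plain vote-fraction threshold instead of a statistical certification test, and the base classifier, `sigma`, `n`, and `min_fraction` values are made-up assumptions.

```python
import random
from collections import Counter


def smoothed_predict(classify, x, sigma, n=200, min_fraction=0.7, seed=0):
    """Vote over Gaussian-noised copies of x; return None (reject) if the vote is weak.

    classify: any base classifier mapping a list of floats to a label.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    votes = Counter()
    for _ in range(n):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        votes[classify(noisy)] += 1
    label, count = votes.most_common(1)[0]
    return label if count / n >= min_fraction else None


def toy_classifier(v):
    """Hypothetical base model: a linear decision rule on two features."""
    return "stop sign" if sum(v) > 0 else "vase"


print(smoothed_predict(toy_classifier, [1.0, 1.0], sigma=0.5))     # stop sign
print(smoothed_predict(toy_classifier, [0.05, -0.05], sigma=0.5))  # None (rejected)
```

An input far from the decision boundary gets a consistent vote, while an input that sits on the boundary, where a tiny perturbation could flip the answer, splits the vote and is rejected rather than confidently misclassified.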

What are your thoughts on this topic?

Please feel free to share them or to contact us directly.

Author 1

Wan-Yi Lin

Wan-Yi Lin is a senior research scientist at the Bosch Research and Technology Center in Pittsburgh, Pennsylvania, USA. She received her Ph.D. in electrical engineering from the University of Maryland, College Park. She has worked extensively on adversarial robustness, multimodal data for autonomous vehicles (including video and LiDAR data), crowdsourced AI systems, anomaly detection, and forensics. She is Co-Principal Investigator (co-PI) on the DARPA project on Guaranteeing AI Robustness Against Deception and has been co-PI on projects sponsored by the U.S. Air Force Research Laboratory and the U.S. Department of Homeland Security.

Author 2

Chaithanya Kumar Mummadi

Chaithanya Kumar Mummadi is a Machine Learning Research Scientist at the Bosch Research and Technology Center in Pittsburgh, Pennsylvania, USA. He completed his PhD work at the Bosch Center for Artificial Intelligence in collaboration with the University of Freiburg, Germany, focusing on improving the adversarial and natural robustness of vision-based AI systems. His experience also includes object detection and multi-object tracking for autonomous vehicles. His research interests are AI robustness, domain generalization, depth estimation, and small-object detection. He is the technical lead for the DARPA project on Guaranteeing AI Robustness Against Deception.

Author 3

Arash Norouzzadeh

Arash Norouzzadeh is a machine learning researcher at the Bosch Center for Artificial Intelligence. He earned his Ph.D. in computer science from the University of Wyoming and has since dedicated his research to developing sophisticated algorithms and models that leverage the capabilities of machine learning. With a particular emphasis on computer vision and deep learning, Arash investigates how these technologies can be applied to address intricate challenges in diverse domains. Through his work, Arash strives to advance the understanding and practical implementation of machine learning techniques, ultimately contributing to cutting-edge solutions for complex problems.

Further information

The following resources provide a more in-depth understanding of robust machine learning and its application to use cases of interest to Bosch.