Radar deep learning — helping vehicles move into the fast lane

Using AI for accurate object detection

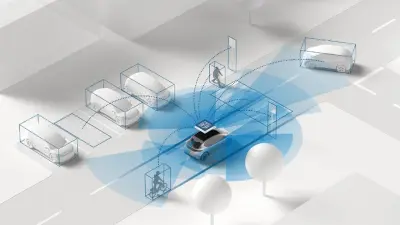

Automated driving functions improve the safety of road traffic, help it flow better, and make it altogether more convenient. If advanced assistance systems are to function safely and reliably, it is essential that the vehicle’s surroundings can be accurately mapped. This mapping involves amalgamating data from the vehicle’s different sensors. In this context, the state-of-the-art solution is to combine perception data from radar, camera, and — optionally — LiDAR systems.

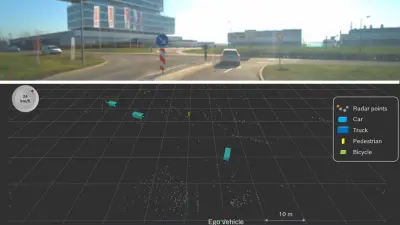

“Radar sensor technology in vehicles has very promising system benefits,” says Michael Ulrich, who is leading the AI-based Radar Perception project at Bosch Research. “Radar technology is robust and reliable, radar sensors are relatively affordable and widely available, and it is easy to incorporate them into vehicles, preferably in inconspicuous areas,” he continues. Nonetheless, radar data poses certain challenges. Radar works by emitting radio waves and measuring how objects reflect them, which reveals the position of a detected object and how far away it is. The resulting data is generally represented as a point cloud, which has poor spatial resolution.
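To make the idea of a radar point cloud more concrete, here is a minimal Python sketch. It assumes each reflection is reported as a distance and a horizontal angle relative to the sensor; the class name `RadarDetection`, its fields, and the sample values are hypothetical and are not taken from the Bosch project.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    """One reflection in a radar point cloud (hypothetical field names)."""
    range_m: float      # distance from the sensor to the reflecting object
    azimuth_rad: float  # horizontal angle; 0 = straight ahead, positive = left


def to_cartesian(det: RadarDetection) -> tuple[float, float]:
    """Convert range/azimuth into x (forward) and y (left), both in meters."""
    x = det.range_m * math.cos(det.azimuth_rad)
    y = det.range_m * math.sin(det.azimuth_rad)
    return x, y


# A tiny, made-up point cloud: three reflections from one object ahead.
point_cloud = [
    RadarDetection(range_m=20.0, azimuth_rad=0.0),
    RadarDetection(range_m=20.1, azimuth_rad=0.02),
    RadarDetection(range_m=20.2, azimuth_rad=-0.02),
]
positions = [to_cartesian(d) for d in point_cloud]
```

Even in this toy case, a whole object is described by only a handful of points, which illustrates why the spatial resolution is limited compared with a camera image.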

Object detection from a variety of sources

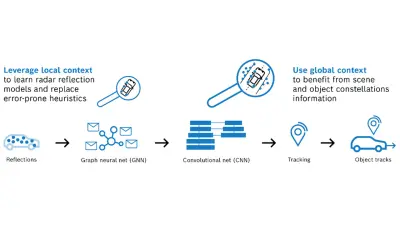

“Compared to radar, the information density of camera data is significantly higher, but cameras do not supply sufficiently reliable information when visibility is poor. To identify objects reliably, we consequently need a variety of sensors that operate independently of one another. The aim of our research project is therefore to use deep learning to train radar perception so it can help significantly improve object detection,” Ulrich says, describing his team’s approach to their research. The interdisciplinary team comprises ten experts from Bosch Research as well as specialists from the Bosch Cross-Domain Computing Solutions division, which develops, commercializes, and markets automated vehicle systems. “The interdisciplinary approach combining research and practical experience is proving really helpful in ensuring that we, the researchers, focus on issues and solutions that have a practical relevance,” Ulrich explains. After all, it is the research results that constitute the basis for the development of future products.

Spread across locations in Germany, the U.S., and Israel, the team members from Bosch Research are experts in perception, signal processing, and machine learning. At present, deep learning research focuses primarily on language processing and image recognition, two fields where the method also has many practical applications. This research project is now applying it to the interpretation of radar data. Deep learning uses what are known as neural networks, which have an extensive internal structure of hidden layers between their input and output layers. Each of these layers derives an increasingly abstract representation of the incoming data. In the output layer, the processed information is combined to produce the result: the identified object.
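The layered structure described here can be sketched in a few lines of plain Python. This is only an illustration of a generic feed-forward network, not the architecture used in the project: the layer sizes, the hand-picked weights, and the function names are all assumptions.

```python
import math


def relu(values):
    """Activation used in the hidden layers: negative values become zero."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """One fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def forward(features, layers):
    """Pass features through the hidden layers, then the output layer."""
    activation = features
    for weights, biases in layers[:-1]:
        activation = relu(dense(activation, weights, biases))
    out_weights, out_biases = layers[-1]
    return softmax(dense(activation, out_weights, out_biases))


# Illustrative, untrained weights: 2 inputs -> 3 hidden units -> 2 classes.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.6, -0.1, 0.2], [-0.4, 0.3, 0.5]], [0.0, 0.0]),
]
class_probabilities = forward([1.0, 2.0], layers)
```

A real perception network has many more layers and millions of weights, and those weights are learned from data rather than written by hand.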

A “training camp” for the neural network

Neural networks are trained on huge volumes of data. In this case, the research objective is to correctly identify objects such as vehicles and pedestrians from radar point clouds, along with their size, direction of movement, and speed. To this end, the network learns from a large collection of radar point clouds and the objects detected in them. Just as in human learning, both correct and incorrect solutions contribute to the progressive acquisition of knowledge. In an actual application, this accumulated knowledge is then available to a fusion component in the vehicle. The purpose of the fusion component is to merge the data from the many different sensors and precisely map the vehicle’s environment.
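How a model learns from both correct and incorrect answers can be illustrated with a deliberately small example. The sketch below trains a single logistic unit by gradient descent, which stands in for a full neural network; the two features (object length and speed), the six synthetic samples, and all names are assumptions made for illustration and do not come from the project.

```python
import math


def predict(weights, bias, features):
    """Probability that an object is a vehicle, from a logistic model."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, epochs=500, learning_rate=0.1):
    """Stochastic gradient descent on the log loss.

    Each prediction error, large or small, nudges the weights so that
    the next prediction for that sample is a little closer to its label.
    """
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Synthetic toy data: (length in m, speed in m/s) -> 1 = vehicle, 0 = pedestrian
samples = [
    ([4.5, 14.0], 1), ([4.2, 8.0], 1), ([5.0, 20.0], 1),
    ([0.5, 1.5], 0), ([0.6, 1.0], 0), ([0.4, 2.0], 0),
]
weights, bias = train(samples)
```

After training, `predict(weights, bias, [4.5, 14.0])` returns a probability above 0.5, while `predict(weights, bias, [0.5, 1.5])` returns one below 0.5. The same principle, scaled up to deep networks and very large labeled datasets, underlies the training described above.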

“One of the next milestones in our research into perception will be a foundation model that integrates the different display formats of vehicle sensors,” says Ulrich, looking ahead. To draw an analogy with everyday life, sensors currently all still speak different languages, whether they are different types of radar sensor or all the sensors in a vehicle. Extending this analogy, the purpose of a foundation model is to apply machine learning in a large neural network in order to find a kind of common language that all the different sensors can use to communicate. Moreover, the deep learning process is to be extended to include radar signal processing, in order to tap the full potential that comes from combining robust radar sensor technology with artificial intelligence for automated driving tasks.